The research focuses on the verification and synthesis of trustworthy autonomous systems with high-level reasoning and resilient decision-making capabilities. Autonomous systems, including robots and other cyber-physical systems, are increasingly deployed in dynamic, stochastic, and potentially adversarial environments to carry out complex mission specifications. Examples include search and rescue robots in contested environments, shared autonomous robots collaborating with human operators, and robots planning in dynamic environments with uncontrollable events.

To enable intelligent decision-making in autonomous and semi-autonomous systems, the research project aims to bring together formal methods, game theory, and control to synthesize systems with provable performance guarantees with respect to high-level logical specifications. Specifically, the current research focuses on:

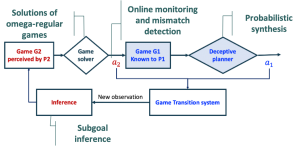

- Formal methods + game theory for security in cyber and physical systems: When carrying out tasks in an adversarial environment, information (or the lack of it) plays a key role in strategic decision-making. In recent work, we developed a class of hypergames on graphs to model the adversarial interaction between an intelligent robot and its adversary, given a task specification in temporal logic and asymmetric information. We investigate solution concepts of hypergames to design deceptive strategies that achieve the mission with provable guarantees. Game theory for deception and counter-deception has important applications in military operations, contested search and rescue, and the synthesis of secure cyber network systems (including industrial control systems and other networks) using deception mechanisms.

The framework of a dynamic level-2 hypergame for deceptive planning [Li et al., T-ASE, 2020, submitted].

- Game theory and probabilistic planning for shared autonomy: A shared autonomous system can be modeled as a non-cooperative game between a human and a robot, with asymmetric and incomplete information. The interaction is non-cooperative because the robot's objective may only partially align with the human's objective. The information is asymmetric and incomplete because the robot may not know whether the operator is an expert or a novice, or whether the operator is willing to adapt to the robot's feedback and guidance. We propose to investigate game theory for mutual adaptation in human-robot teaming. This is an NSF project in collaboration with WPI HiRO lab and WPI Soft Robotics Lab. Related work is forthcoming.

Related work:

- Abhishek N. Kulkarni, Jie Fu, Huan Luo, Charles A. Kamhoua, Nandi O. Leslie, “Decoy Placement Games on Graphs with Temporal Logic Objectives”, Conference on Decision and Game Theory for Security (GameSec), 2020.

- Abhishek N. Kulkarni, Jie Fu, “Deceptive Strategy Synthesis under Action Misperception in Reachability Games”, International Joint Conference on Artificial Intelligence (IJCAI), 2020. Acceptance rate: 12.6%.

- Lening Li, Haoxiang Ma, Abhishek N. Kulkarni, Jie Fu, “Dynamic Hypergames for Synthesis of Deceptive Strategies with Temporal Logic Objectives”, submitted to IEEE Transactions on Automation Science and Engineering, 2020. arXiv preprint.

- A. N. Kulkarni, H. Luo, N. O. Leslie, C. A. Kamhoua, and J. Fu, “Deceptive Labeling: Hypergames on Graphs for Stealthy Deception”, IEEE Control Systems Letters, vol. 5, no. 3, pp. 977-982, July 2021 (to appear). To be presented at IEEE CDC 2020.

- Zhentian Qian, Jie Fu, Quanyan Zhu, “A Receding-Horizon MDP Approach for Performance Evaluation of Moving Target Defense in Networks”, IEEE Conference on Control Technology and Applications (CCTA), 2020.

- Abhishek N. Kulkarni, Jie Fu, “Opportunistic Synthesis in Reactive Games under Information Asymmetry”, IEEE Conference on Decision and Control, 2019.