People prefer autonomous camera viewpoint control, but not autonomous camera selection

By Alexandra Valiton (2020/03)

Supervisory control of a humanoid robot in a manipulation task requires coordinating remote perception with robot action, which becomes more demanding when multiple moving cameras are available for task supervision. We explore the use of autonomous camera control and selection to reduce operator workload and improve task performance in a supervisory control task. We design a novel approach to autonomous camera selection and control and evaluate it in a user study. The study showed that autonomous camera control improves task performance and operator experience, whereas autonomous camera selection requires further investigation before it can support the operator’s confidence and maintain trust in the robot autonomy.

Publication

- Alexandra Valiton, Hannah Baez, Naomi Harrison, Justine Roy, and Zhi Li, “Active Telepresence Assistance for Supervisory Control: A User Study with a Multi-Camera Tele-Nursing Robot”, accepted by the 2021 IEEE International Conference on Robotics and Automation (ICRA).

Various autonomous camera viewpoint control approaches

By Trevor Sherrard, Yicheng Yang, and Jialin Song (2020/12)

Selected Project from RBE/CS 526 Human-Robot Interaction (Fall 2020)

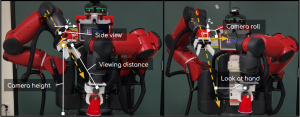

In this work, we design, implement, and informally evaluate an autonomous camera viewpoint selection paradigm that assists a user in performing complex tasks through a teleoperation interface. The system consists of two robot arms: an autonomous eye-in-hand camera arm and a user-controllable manipulation arm. While the user performs tasks remotely, the eye-in-hand camera arm attempts to provide the best possible viewpoint. Our viewpoint selection algorithm attempts to maximize image saliency and minimize occlusions from the manipulator and the surrounding environment, while avoiding sudden, disorienting camera movements. We also demonstrate an effective design and implementation of a task-specific viewpoint selection state machine. To make the robot more accessible to users who are not subject matter experts, we implement an intuitive web-based GUI for mobile devices and tablets. Finally, we discuss the implications of our work for telenursing and telesurgery robotics.
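To make the trade-off described above concrete, the following is a minimal sketch of how candidate viewpoints could be ranked. It is not the project's implementation: the `Candidate` fields, the weighted-sum form of the score, the default weights, and the use of Euclidean distance as a proxy for "sudden movement" are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Candidate:
    """A hypothetical candidate camera viewpoint (illustrative fields only)."""
    position: Vec3    # camera position in some fixed frame, in metres
    saliency: float   # assumed normalised to [0, 1]; higher is better
    occlusion: float  # assumed fraction of the view that is blocked, in [0, 1]


def viewpoint_score(c: Candidate, current: Vec3,
                    w_saliency: float = 1.0,
                    w_occlusion: float = 1.0,
                    w_motion: float = 0.5) -> float:
    """Reward saliency; penalise occlusion and distance from the current pose."""
    motion = math.dist(c.position, current)  # proxy for a disorienting jump
    return w_saliency * c.saliency - w_occlusion * c.occlusion - w_motion * motion


def select_viewpoint(candidates: Iterable[Candidate], current: Vec3,
                     **weights: float) -> Candidate:
    """Return the candidate with the highest score."""
    return max(candidates, key=lambda c: viewpoint_score(c, current, **weights))


if __name__ == "__main__":
    current_pose = (0.0, 0.0, 0.0)
    options = [
        Candidate((0.1, 0.0, 0.0), saliency=0.6, occlusion=0.1),  # nearby, clear
        Candidate((2.0, 0.0, 0.0), saliency=0.9, occlusion=0.0),  # salient but far
        Candidate((0.0, 0.1, 0.0), saliency=0.9, occlusion=0.8),  # nearby, blocked
    ]
    print(select_viewpoint(options, current_pose))
```

In this sketch, raising `w_motion` favors viewpoints close to the current camera pose, while setting it to zero lets the camera jump to the most salient unoccluded view regardless of distance.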

Demo