Shared Autonomous Mobile Humanoid Robots for Remote Nursing and Living Assistance

- [2022] We recently integrated a new mobile humanoid robot platform. Check out IONA (Intelligent rObotic Nursing Assistant)!

- [2017] At WPI, we continue to improve the intelligence of robot autonomy and the human-robot interface. Our work enables healthcare workers who are new to robot teleoperation to control the robot efficiently, intuitively and effortlessly while performing nursing assistance tasks that involve dexterous manipulation, navigation and active perception.

- [2015-2016] In response to the outbreak of highly infectious diseases, such as Ebola (2015) and Zika (2016), a Tele-Robotic Intelligent Nursing Assistant (TRINA) was developed at Duke University to enable healthcare workers to perform routine patient care tasks without direct contact, and to handle contaminated materials and protective gear. (see demo)

IONA – Intelligent rObotic Nursing Assistant

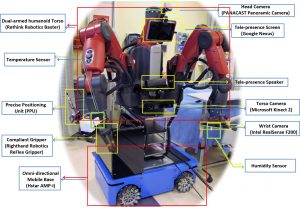

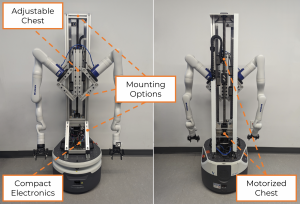

Our objective is to develop a versatile supporting structure that facilitates the flexible integration of system components and expands the manipulation workspace of robotic arms. We present IONA – Intelligent rObotic Nursing Assistant, a novel integration of a shared autonomous mobile humanoid robot for remote nursing assistance.

IONA features a motorized chest, which extends its workspace along the Z-axis, and a compact profile with a height of 1700 mm and a width of up to 850 mm, depending on the robotic arm mounting angle. The system supports various AR and VR control interfaces. [Demo]

Open-source ROS-Unity Simulation

We developed an open-source virtual testbed that integrates ROS- and Unity-based robot simulation. It features a virtual representation of IONA and human agents. The simulation renders a realistic hospital interior and facility, including nurse stations, patient, clinic, and storage rooms. With the focus on mobile manipulation, we developed various nursing assistance benchmark tasks, such as pushing a medical cart, disinfecting a surface, monitoring vital signs, and moving an IV stand. [Demo]

See our GitHub repository!

Shared Autonomy for Assisting Remote Robotic Manipulation

How to Reduce the Physical Workload in Teleoperation Via Motion Mapping?

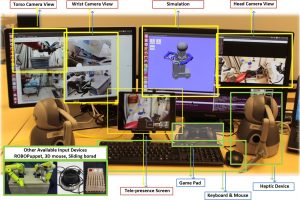

Tele-nursing robots provide a safe approach to patient care in quarantine areas. Effective nurse-robot collaboration requires ergonomic, intuitive teleoperation interfaces that impose low physical and cognitive workload. We propose a framework for evaluating control interfaces so that an intuitive, efficient, and ergonomic teleoperation interface can be developed iteratively. The framework is a hierarchical procedure that proceeds from general to specific assessment and ties each assessment to its role in design evolution. We first present pre-defined objective and subjective metrics and use them to evaluate three representative contemporary teleoperation interfaces. The results indicate that teleoperation via human motion mapping outperforms the gamepad and stylus interfaces, at the cost of non-trivial physical fatigue. To understand the impact of this heavy physical demand, we propose an objective assessment of physical workload in teleoperation using electromyography (EMG). We find that physical fatigue occurs in actions that involve precise manipulation and steady posture maintenance. We then implemented teleoperation assistance in the form of shared autonomy to eliminate the fatigue-causing components of robot teleoperation via motion mapping. The experimental results show that the autonomous feature effectively reduces physical effort while improving the efficiency and accuracy of the teleoperation interface.
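As a rough illustration of what an EMG-based workload measure can look like, the sketch below computes a sliding-window RMS of an EMG signal and expresses it as a percentage of the peak RMS recorded during a maximum voluntary contraction (MVC). This is a commonly used normalization and is only an assumption here; it is not necessarily the analysis used in the publications below. The function names `moving_rms` and `percent_mvc`, the window length, and the assumption that the signal is already mean-removed are all hypothetical choices for this example.

```python
import math
from typing import List, Sequence


def moving_rms(signal: Sequence[float], window: int) -> List[float]:
    """Root-mean-square over a sliding window (fully overlapping positions only).

    Assumes `signal` is a zero-mean EMG recording (an assumption of this sketch).
    """
    if window <= 0 or window > len(signal):
        raise ValueError("window must be between 1 and len(signal)")
    squares = [x * x for x in signal]
    running = sum(squares[:window])
    out = [math.sqrt(running / window)]
    for i in range(window, len(squares)):
        # Slide the window by one sample: add the new square, drop the oldest.
        running += squares[i] - squares[i - window]
        out.append(math.sqrt(max(running, 0.0) / window))
    return out


def percent_mvc(task_emg: Sequence[float],
                mvc_emg: Sequence[float],
                window: int) -> List[float]:
    """Task effort as a percentage of the peak RMS seen during the MVC trial."""
    mvc_peak = max(moving_rms(mvc_emg, window))
    if mvc_peak == 0.0:
        raise ValueError("MVC recording has zero amplitude")
    return [100.0 * v / mvc_peak for v in moving_rms(task_emg, window)]


if __name__ == "__main__":
    # Synthetic data: the task signal has half the amplitude of the MVC trial.
    task = [1.0, -1.0, 1.0, -1.0]
    mvc = [2.0, -2.0, 2.0, -2.0]
    print(percent_mvc(task, mvc, window=2))
```

With a measure of this kind, sustained high values during a particular action (for example, holding a steady posture) would flag that action as a candidate for autonomous assistance.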

Selected Publications

- Tsung-Chi Lin, Achyuthan Unni Krishnan and Zhi Li, “Intuitive, Efficient and Ergonomic Tele-Nursing Robot Interfaces: Design Evaluation and Evolution”, ACM Transactions on Human-Robot Interaction, accepted May 2021. PDF

- Tsung-Chi Lin, Achyuthan Unni Krishnan and Zhi Li, “Shared Autonomous Interface for Reducing Physical Effort in Robot Teleoperation via Human Motion Mapping”, in 2020 IEEE International Conference on Robotics and Automation (ICRA), 2020. PDF [Demo]

- Tsung-Chi Lin, Achyuthan Unni Krishnan and Zhi Li, “Physical Fatigue Analysis of Assistive Robot Teleoperation via Whole-body Motion Mapping”, in 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 2240–2245, IEEE, 2019. PDF [Demo]

Perception and Action Assistance for Freeform Tele-manipulation Control

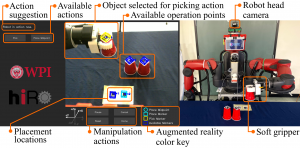

Teleoperation enables remote control of complex robot systems, making it possible to apply human expertise from a distance. However, teleoperation interfaces can be complicated to use because it is difficult to contextualize robot motion in the workspace from limited camera feedback. It is therefore necessary to study how assistance can best be provided to the operator to reduce interface complexity and the effort required for teleoperation. Techniques that assist the operator during freeform teleoperation include: 1) perception augmentation, such as augmented reality visual cues and additional camera angles, which increases the information available to the operator; and 2) action augmentation, such as assistive autonomy and control augmentation, which is optimized to reduce the effort required of the operator. In this paper we investigate: 1) which aspects of dexterous tele-manipulation require assistance; 2) the impact of perception and action augmentation on teleoperation performance; and 3) what factors affect the usage of assistance and how to tailor these interfaces to the operators’ needs and characteristics. The findings from this user study and the resulting post-study surveys will help us optimize a design for teleoperation assistance that is more task- and user-specific. (see demo)

Selected Publications

- Tsung-Chi Lin, Achyuthan Unni Krishnan and Zhi Li, “Perception and Action Augmentation for Teleoperation Assistance in Freeform Tele-manipulation”, Submitted to ACM Transactions on Human-Robot Interaction, 2022/07.

- Tsung-Chi Lin, Achyuthan Unni Krishnan and Zhi Li. “Impact of Unreliable Assistive Autonomy on Shared and Supervisory Control for Remote Robot Manipulation.”, Submitted to 2023 International Conference on Robotics and Automation (ICRA), 2023.

- Achyuthan Unni Krishnan, Tsung-Chi Lin, and Zhi Li. “Design Interface Mapping for Efficient Free-form Tele-manipulation.” In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022). 2022. PDF

- Lin, Tsung-Chi, Achyuthan Unni Krishnan, and Zhi Li. “Comparison of Haptic and Augmented Reality Visual Cues for Assisting Tele-manipulation.” In 2022 International Conference on Robotics and Automation (ICRA), pp. 9309-9316. IEEE, 2022. PDF [Demo]

Perception and Action Assistance for Supervisory Control of Robotic Manipulation

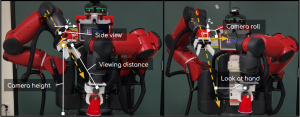

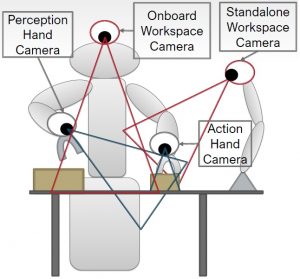

Supervisory control of a humanoid robot in a manipulation task requires coordination of remote perception with robot action, which becomes more demanding with multiple moving cameras available for task supervision. We explore the use of autonomous camera control and selection to reduce operator workload and improve task performance in a supervisory control task. We design a novel approach to autonomous camera selection and control. Our user study shows that autonomous camera control does improve task performance and operator experience, but autonomous camera selection requires further investigation to benefit the operator’s confidence and maintain trust in the robot autonomy. (see demo)

We also developed a graphical user interface for high-level robot control. The interface framework enables teleoperators to control a robot at the action level and incorporates a simple but effective design that lets teleoperators recover from task failure in a number of ways. Our user study with N = 25 participants shows that a high-level interface able to handle the most frequent errors is resilient to the effects of unreliable robot autonomy. Although the total task completion time increased as the robot autonomy became less reliable, the users’ perception of workload and task performance was not affected.

Selected Publications

- Alexandra Valiton, Hannah Baez, Naomi Harrison, Justine Roy, and Zhi Li, “Active Telepresence Assistance for Supervisory Control: A User Study with a Multi-Camera Tele-Nursing Robot”, IEEE International Conference on Robotics and Automation (ICRA), 2021. PDF

- Samuel White, Keion Bisland, Michael Collins, and Zhi Li, “Design of a High-level Teleoperation Interface Resilient to the Effects of Unreliable Robot Autonomy”, in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 11519-11524, IEEE, 2020. PDF

Perception-Action Coordination When Using Active Telepresence Cameras

Teleoperation enables complex robot platforms to perform tasks beyond the scope of current state-of-the-art robot autonomy by bringing human intelligence and critical thinking to these operations. For seamless control of robot platforms, it is essential to give the operator good situational awareness of the workspace through active telepresence cameras. However, controlling these cameras adds a degree of complexity to the teleoperation task. In this paper we present results from a user study that investigates: 1) how the teleoperator learns or adapts to performing tasks via active cameras modeled after the camera placements on the TRINA humanoid robot; 2) the perception-action coupling that operators use to control active telepresence cameras; and 3) the camera preferences for performing the tasks. The findings from the human motion analysis and post-study survey will help us determine desired design features for robot teleoperation interfaces and assistive autonomy. (see demo)

Selected Publications

- Tsung-Chi Lin, Achyuthan Unni Krishnan and Zhi Li, “Perception-Motion Coupling in Active Telepresence: Human Behavior and Teleoperation Interface Design”, ACM Transactions on Human-Robot Interaction, accepted Nov 2022. PDF

- Tsung-Chi Lin, Achyuthan Unni Krishnan and Zhi Li, “How People Use Active Telepresence Cameras in Tele-manipulation”, IEEE International Conference on Robotics and Automation (ICRA), 2021. PDF [Demo]

- Alexandra Valiton and Zhi Li, “Perception-Action Coupling in Usage of Telepresence Cameras”, in 2020 IEEE International Conference on Robotics and Automation (ICRA), pp. 3846-3852. IEEE, 2020. Finalist of Best Paper for Human-Robot Interaction. PDF [Demo]

[2016] TRINA – Tele-Robotic Intelligent Nursing Assistant

Selected Publications

- Zhi Li, Peter Moran, Qingyuan Dong, Ryan Shaw, Kris Hauser, “Development of a Tele-Nursing Mobile Manipulator for Remote Care-giving in Quarantine Areas”, In 2017 IEEE International Conference on Robotics and Automation (ICRA), pp. 3581-3586. PDF

- Jianqiao Li, Zhi Li, Kris Hauser, “A Study of Bidirectionally Telepresent Tele-Action During Robot-Mediated Handover”, In 2017 IEEE International Conference on Robotics and Automation (ICRA), pp. 2890-2896. PDF