Project Overview:

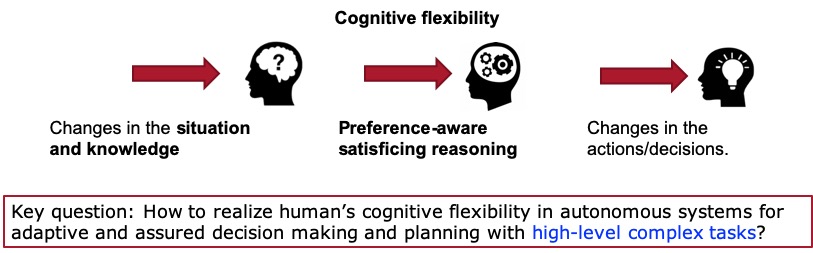

Humans outperform autonomous systems in their cognitive flexibility to make satisficing decisions in uncertain and dynamic environments. Preference plays a key role in determining which goals or requirements to satisfy when not all desired goals can be achieved. However, in contested and stochastic environments, it may be infeasible for the human operator to decide in a timely manner which objectives to compromise. It is therefore critical to leverage autonomy to augment the human's decision-making capability by providing decision support and action plans. Such a level of intelligence can only be achieved if the autonomous system is equipped with human-like, preference-based reasoning for satisficing planning.

This project aims to close a major gap in the theory and algorithms for preference-based decision-making in stochastic and dynamic environments with complex mission objectives. Specifically, it focuses on three key questions: How can the human's temporally evolving preferences and goals be specified rigorously in formal logic? How can we synthesize an autonomous system that provably satisfies the operator's preferences in stochastic environments? How can the system be made robust and adaptive when it plans with initially incomplete knowledge of the preferences and of the uncertain environment?

Team members: Dr. Jie Fu (PI), Abhishek Kulkarni, and Xuan Liu (Ph.D. students)

We are actively looking for new graduate students to join this exciting project.

Acknowledgment:

Air Force Office of Scientific Research, YIP Award (2021 to present)

Related Publications: